I would like to start here a research thread of the long-promised Polymath3 on the polynomial Hirsch conjecture.

I propose to try to solve the following purely combinatorial problem.

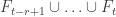

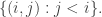

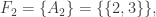

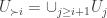

Consider t disjoint families of subsets of {1,2,…,n}, $F_1, F_2, \dots, F_t$.

Suppose that

(*) For every $i < j < k$, and every $S \in F_i$ and $T \in F_k$, there is $R \in F_j$ which contains $S \cap T$.

The basic question is: How large can t be???

(When we say that the families are disjoint we mean that there is no set that belongs to two families. The sets in a single family need not be disjoint.)

In a recent post I showed the very simple argument for an upper bound $n^{\log n+1}$. The major question is whether there is a polynomial upper bound. I will repeat the argument below the dividing line and explain the connections between a few versions.

A polynomial upper bound for $f(n)$ will imply a polynomial (in $n$) upper bound for the diameter of graphs of polytopes with $n$ facets. So the task we face is either to prove such a polynomial upper bound or give an example where $t$ is superpolynomial.

The abstract setting is taken from the paper Diameter of Polyhedra: The Limits of Abstraction by Friedrich Eisenbrand, Nicolai Hahnle, Sasha Razborov, and Thomas Rothvoss. They gave an example showing that $f(n)$ can be quadratic.

We had many posts related to the Hirsch conjecture.

Remark: The comments for this post will serve both as the research thread and for discussions. I suggest concentrating on a rather focused problem, but other directions/suggestions are welcome as well.

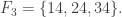

Let’s call the maximum t, f(n).

Remark: If you restrict your attention to sets in these families containing an element m and delete m from all of them, you get another example of such families of sets, possibly with smaller value of t. (Those families which do not include any set containing m will vanish.)

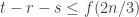

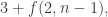

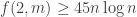

Theorem: $f(n) \le f(n-1) + 2f(n/2)$.

Proof: Consider the largest s so that the union of all sets in $F_1, \dots, F_s$ has size at most n/2. Clearly, $s \le f(n/2)$.

Consider the largest r so that the union of all sets in $F_{t-r+1}, \dots, F_t$ has size at most n/2. Clearly, $r \le f(n/2)$.

Now, by the definition of s and r, there is an element m shared by a set in the first s+1 families and a set in the last r+1 families. Therefore (by (*)), when we restrict our attention to the sets containing ‘m’ the families $F_{s+1}, \dots, F_{t-r}$ all survive. We get that $t-r-s \le f(n-1)$. Q.E.D.
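To get a feel for how fast the recurrence in the theorem grows, one can simply iterate it. The sketch below is only an illustration: the function name `recurrence_bound` is hypothetical, $n/2$ is read as $\lfloor n/2 \rfloor$, and the base values $g(0)=1$, $g(1)=2$ are assumptions taken from the smallest cases.

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def recurrence_bound(n: int) -> int:
    """Iterate g(n) = g(n-1) + 2*g(n//2) with assumed base values.

    Assumptions (not from the post): g(0) = 1 and g(1) = 2,
    and n/2 is rounded down.
    """
    if n == 0:
        return 1  # only the family {emptyset} is possible
    if n == 1:
        return 2  # {emptyset} and {{1}}
    return recurrence_bound(n - 1) + 2 * recurrence_bound(n // 2)


if __name__ == "__main__":
    for n in (2, 4, 8, 16, 32, 64):
        print(n, recurrence_bound(n))
```

The printed values grow faster than any fixed power of n but far slower than $2^n$, which is the quasi-polynomial behaviour the argument gives.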

Remarks:

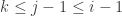

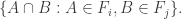

1) The abstract setting is taken from the paper by Eisenbrand, Hahnle, Razborov, and Rothvoss (EHRR). We can consider families of d-subsets of {1,2,…, n}, and denote the maximum cardinality t by $f(d,n)$. The argument above gives the relation $f(d,n) \le 2f(d,n/2) + f(d-1,n-1)$, which implies $f(d,n) \le n^{\log d+1}$.

2) $f(d,n)$ (and thus also $f(n)$) are upper bounds for the diameter of graphs of d-polytopes with n facets. Let me explain this and also the relation with another abstract formulation. Start with a $d$-polytope with $n$ facets. To every vertex v of the polytope associate the set $S_v$ of facets containing $v$. Starting with a vertex $w$ we can consider $F_i$ as the family of sets which correspond to vertices of distance $i$ from $w$. So the number of such families (for an appropriate $w$) is as large as the diameter of the graph of the polytope. I will explain in a minute why condition (*) is satisfied.

3) For the diameter of graphs of polytopes we can restrict our attention to simple polytopes, namely to the case that all sets $S_v$ have size $d$.

4) Why do the families arising from graphs of simple polytopes satisfy (*)? Suppose you have a vertex $v$ at distance $i$ from $w$, and a vertex $u$ at distance $k > i$. Consider the shortest path from $v$ to $u$ in the smallest face $G$ containing both $v$ and $u$. The set $S_z$ of every vertex $z$ in $G$ (and hence on this path) satisfies $S_v \cap S_u \subset S_z$. The distances from $w$ of adjacent vertices in the shortest path from $v$ to $u$ differ by at most 1. So for every $j$ with $i < j < k$ one vertex on the path must be at distance $j$ from $w$.

5) EHRR also considered the following setting: consider a graph whose vertices are labeled by subsets of {1,2,…,n}. Assume that for every vertex v labelled by S(v) and every vertex u labelled by S(u) there is a path so that all vertices are labelled by sets containing $S(v) \cap S(u)$. Note that having such a labelling is the only property of graphs of simple $d$-polytopes that we have used in remark 4.


Dear Gil,

I’ve only recently thought about this problem again, so let me just throw some thoughts out there. I have been considering a variant of this problem where instead of sets one allows multisets of fixed cardinality d, or equivalently monomials of degree d over n variables.

In this setting, there is actually a very simple construction that gives a lower bound of d(n-1) + 1, the sequence of multisets (for the case d=4 but it easily generalizes):

1111, 1112, 1122, 1222, 2222, 2223, etc.,

Note that here we have a family of multi-sets where each family in fact only contains a single multi-set. There are alternative constructions that achieve the same “length” without singletons, e.g. you can also partition all d-element multisets into families and achieve d(n-1) + 1.
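The staircase sequence above is easy to check mechanically. The following is a minimal sketch, assuming the multiset version of (*) reads "for $i<j<k$, the multiset intersection (minimum of multiplicities) of the i-th and k-th multisets is contained in the j-th"; the helper names `staircase_chain`, `contains` and `is_convex` are made up for this illustration.

```python
from collections import Counter


def staircase_chain(d, n):
    """Multisets 1^d, 1^(d-1)2, ..., 2^d, 2^(d-1)3, ..., n^d as Counters."""
    chain = []
    for j in range(1, n):
        for r in range(d):
            # d - r copies of j and r copies of j + 1
            chain.append(Counter({j: d - r, j + 1: r}))
    chain.append(Counter({n: d}))
    return chain


def contains(big, small):
    """True if every multiplicity in `small` is matched in `big`."""
    return all(big[x] >= c for x, c in small.items())


def is_convex(chain):
    """Check M_i & M_k <= M_j for all i < j < k (multiset intersection)."""
    for i in range(len(chain)):
        for k in range(i + 2, len(chain)):
            common = chain[i] & chain[k]  # minimum of multiplicities
            if not all(contains(chain[j], common) for j in range(i + 1, k)):
                return False
    return True


if __name__ == "__main__":
    chain = staircase_chain(4, 3)
    print(len(chain), is_convex(chain))
```

For d=4 and n=3 the chain has d(n-1)+1 = 9 members, matching the stated length.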

My current guess would be that this construction is best possible, i.e. I would conjecture d(n-1) + 1 to be an upper bound.

This upper bound holds for all _partitions_ of the d-multisets into families, i.e. it holds in the case where every multiset appears in exactly one of the families, via a simple inductive argument: take one multiset from the first family and one from the last, then take a (d-1)-subset of the first and one element of the last. The union is a d-multiset that must appear in one of the families; proceed by induction on the “dimension” d.

So to disprove my guess for the upper bound would require cleverly _not using_ certain multisets somehow.

The upper bound holds for n <= 3 and all d, for d <= 2 and all n, and – provided that I made no mistake in my computer code – it also holds for:

– n=4 and d<= 13

– n=5 and d<= 7

– n=6 and d<= 5

– n=7 and d<=4

– n=8 and d=3

Yes, the order of n and d is correct. I used SAT solvers to look for counter-examples to my hypothesis, and I stopped when I reached instances that ran out of memory after somewhere between one and two weeks of computation.

The case that bugs me the most personally is that I could not prove anything for d=3, where the best upper bound I know of is essentially 4n (via the Barnette/Larman argument for linear diameter in fixed dimension). Though it would be also interesting to think about what can be said about fixing n=4.

I have not given the problem any thought yet in the form that you stated it; I will give it a try, maybe it leads to some ideas that I haven't thought of in the "dimension-constrained" version that I looked at.

Dear Nicolai,

This is very interesting! Please do elaborate on the various general constructions giving d(n-1)+1. The argument that it is tight for families which contain all elements is also interesting. Can’t you get even better constructions for multisets based on the ideas from your paper?

Here’s a construction giving d(n-1)+1 that partitions the set of d-multisets. Take the groundset of n elements to be {0,1,2,…,n-1} and define for each multiset S the value s(S) to be the sum of its elements. Then the preimages of the numbers 0 through d(n-1) partition the multisets into families with the desired property.
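The sum construction can be verified by brute force for small parameters. This is a sketch under the same reading of (*) for multisets (intersection taken as the minimum of multiplicities); `sum_partition` and `satisfies_star` are illustrative names, not anything from the paper.

```python
from collections import Counter, defaultdict
from itertools import combinations_with_replacement


def sum_partition(d, n):
    """Group all d-multisets of {0,...,n-1} by the sum of their elements."""
    by_sum = defaultdict(list)
    for combo in combinations_with_replacement(range(n), d):
        by_sum[sum(combo)].append(Counter(combo))
    return [by_sum[s] for s in sorted(by_sum)]


def satisfies_star(families):
    """Check (*) for multisets: S & T must lie inside some R in each F_j."""
    for i in range(len(families)):
        for k in range(i + 2, len(families)):
            for S in families[i]:
                for T in families[k]:
                    common = S & T
                    for j in range(i + 1, k):
                        if not any(
                            all(R[x] >= c for x, c in common.items())
                            for R in families[j]
                        ):
                            return False
    return True


if __name__ == "__main__":
    families = sum_partition(3, 4)
    print(len(families), satisfies_star(families))
```

With d=3 and n=4 the sums range over 0,…,9, so there are d(n-1)+1 = 10 families.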

This is the only other general construction I have. There are other examples for small cases that I found, and it seems like in general there should be many such examples, though so far I can only back this up with fuzzy feelings.

As for the constructions of the paper, interestingly it turns out that the two variants (with sets and with multisets) are in a sense asymptotically equivalent. Basically, what we do in the paper can be interpreted in the following way.

You start with the simple multiset construction that I outlined in my earlier post using {1,2,…,n} as your basic set. Then you get a set construction on the set {1,2,…,n}x{1,2,…,m} by replacing each of the multisets in the original by one of the blocks that we construct in the paper using disjoint coverings. It turns out that this can be generalized to starting with arbitrary multisets.

What you get is a result somewhat like this: if f(d,n) is the upper bound in the sets variant and f'(d,n) is the upper bound on the multisets variant, then of course f(d,n) <= f'(d,n) just by definition, and by the construction f'(d,nm) <= DC(m) f(d,n), where the DC(m) part is essentially the number of disjoint coverings you can find, as this determines the "length" of the blocks in the construction.

I’ve started a wiki page for this project at

http://michaelnielsen.org/polymath1/index.php?title=The_polynomial_Hirsch_conjecture

but it needs plenty of work, ideally by people who are more familiar with the problem than I.


One place to get started is to try to work out some upper and lower bounds on f(n) (the largest t for which such a configuration can occur) for small n, to build up some intuition.

I take it all the families F_i are assumed to be non-empty? Otherwise there is no bound on t because we could take all the F_i to be empty.

Assuming non-emptiness (and thus the trivial bound f(n) <= 2^n), one trivially has f(0)=1 (take F_1 to consist of just the emptyset), f(1)=2 (take F_1 = {emptyset}, F_2 = { {1} }, say), and f(2) = 4 (take F_1 = {emptyset}, F_2 = { { 1 } }, F_3 = { { 1,2} }, F_4 = { { 2 } }), if I understand the notations correctly.

So I guess the first thing to figure out is what f(3) is…

I can show f(3) can’t be 8. In that case, each of the families would consist of a single set, and one of the families, say F_i, would consist of the whole set {1,2,3}. Then the sets in F_1, …, F_{i-1} must be increasing, as must the sets in F_8, …, F_{i+1}. Only one of these sequences can grab the empty set and have length three; the other can have length at most 2. This gives a total length of 3+1+2 = 6, a contradiction.

On the other hand, one can attain f(3) >= 6 with the families {emptyset}, {{2}}, {{1,2}}, {{1}}, {{1,3}}, {{3}}.
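Small examples like these are easy to get wrong by hand, so here is a minimal checker for condition (*) and for disjointness, applied to the two sequences above. The function names are hypothetical; families are given as lists of frozensets in the order $F_1, \dots, F_t$.

```python
from itertools import combinations


def satisfies_star(families):
    """Check (*): for i < j < k, S in F_i, T in F_k, some R in F_j has S & T <= R."""
    for i, k in combinations(range(len(families)), 2):
        for S in families[i]:
            for T in families[k]:
                common = S & T
                for j in range(i + 1, k):
                    if not any(common <= R for R in families[j]):
                        return False
    return True


def are_disjoint(families):
    """No set may belong to two families."""
    all_sets = [S for F in families for S in F]
    return len(all_sets) == len(set(all_sets))


def fam(*sets):
    """Build one family from tuples of elements."""
    return [frozenset(s) for s in sets]


example_n2 = [fam(()), fam((1,)), fam((1, 2)), fam((2,))]
example_n3 = [fam(()), fam((2,)), fam((1, 2)), fam((1,)), fam((1, 3)), fam((3,))]

if __name__ == "__main__":
    for example in (example_n2, example_n3):
        print(len(example), satisfies_star(example), are_disjoint(example))
```

Both sequences pass, which confirms the lower bounds f(2) >= 4 and f(3) >= 6 but says nothing about upper bounds.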

Not sure whether f(3)=7 is true yet though.

More generally, one might like to play with the restricted function f'(n), defined as with f(n) except that each of the F_i are forced to be singleton families (i.e. they consist of just one set $F_i = \{A_i\}$, with the $A_i$ distinct). The condition (*) then becomes that $A_i \cap A_k \subset A_j$ whenever $i < j < k$. It should be possible to compute f'(n) quite precisely. Unfortunately this does not upper bound f(n) since $f'(n) \leq f(n)$, but it may offer some intuition.

[Gil, if you can fix the latex in my previous comment that would be great. GK:done]

I can now rule out f(3)=7 and deduce that f(3)=6. The argument from 8:19pm shows that if one of the families contains {1,2,3}, then there must be an ascending chain to the left of that family and a descending chain to the right giving at most 6 families, a contradiction. So we can’t have {1,2,3} and so must distribute the remaining seven subsets of {1,2,3} among seven families, so that each family is again a singleton.

The sets {1}, {2}, {3} must appear somewhere; without loss of generality we may assume that {1} appears to the left of {2}, which appears to the left of {3}. Then none of the families to the left of {2} can contain a set that contains 3, and none of the families to the right can contain a set that contains 1. In particular there is nowhere for {1,3} to go and so one cannot have seven families.


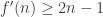

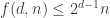

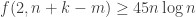

I may be making a stupid error here, but it seems that the proof of $f(n) \leq f(n-1) + 2 f(n/2)$ in the previous post can be easily modified to give $f(n) \leq 3 f(2n/3)$, which would then give the polynomial growth bound $f(n) = O(n^{\log 3/\log (3/2)})$.

Indeed, if we have t families F_1, …, F_t, we let s be the largest number such that $F_1 \cup \dots \cup F_s$ is supported in a set of size at most 2n/3, and similarly let r be the largest number such that $F_{t-r+1} \cup \dots \cup F_t$ is supported in a set of size at most 2n/3. Then s and r are at most $f(2n/3)$, and there is a set of size at least n/3 that is common to at least one member of each of the intermediate families $F_{s+1}, \dots, F_{t-r}$. Restricting to those members and then deleting the common set, it seems to me that we have $t-r-s \leq f(2n/3)$, which gives the claimed bound, unless I’ve made a mistake somewhere…

Are $F_{s+1},\ldots,F_{t-r}$, with the common set removed, disjoint?

The proof of $f(n) \leq f(n-1) + 2 f( n/2 )$ is incomplete as it also fails to show that the reduced intermediate families are disjoint.

It seems that the f'(n) (suggested in the 8:22pm comment) are easy to figure out: Look at the first and second sets in the list; some element x is in the first but not in the second. This x can never appear again in the list of sets, so we get the recursion f'(n) <= f'(n-1)+1, and thus f'(n)<=n which can be achieved by the list of singletons. Something similar should apply also if we allow multisets (as in the first comment), where each time the maximum allowed multiplicity of some element decreases by 1, never to increase again.

Noam, it could be that the first set is completely contained in the second, but of course this situation cannot continue indefinitely, so one certainly gets a quadratic bound f'(n) = O(n^2) at least out of this.

Gil, I was reading the Eisenbrand-Hahnle-Razborov-Rothvoss paper and they seem to have a slightly different combinatorial setup than in your problem, in which one has a graph whose vertices are d-element subsets of [n] with the property that any two vertices u, v are joined by a path consisting only of vertices that contain $u \cap v$. Could you explain a bit more how your combinatorial setup is related to this (if it is), and what the connection to the polynomial Hirsch conjecture is?

Terry, regarding the 11:32 attempt, I think that the bug is that even though the support of the prefix has a n/3 intersection with the support of the suffix this does not imply that this intersection is common to every set in the middle but rather only to the support of the middle.

Let’s fix the f'(n) bound to at least f'(n)<=2n (not tight, it seems). Define f'(n,k) to be the max length you can get if the first set in the sequence has size k. So, I would claim that f'(n,k) <= 2n-k. Let k' be the size of the second set. If k' > k then we have f'(n,k) <= 1 + f'(n,k').

If k=k' then we have f'(n,k) <= 1+f'(n-1,k) (since some element was removed and will never appear again).

If k'<k then we have f'(n,k) <= 1+f'(n-(k-k'), k') (since at least k-k' elements were removed forever).

the last comment got garbled… i was claiming that f'(n,k)<=2n-k.
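For what it is worth, the claimed bound is consistent with the three cases if one argues by induction on the length of the sequence. The check below is a sketch under my reading of the comment: k' denotes the size of the second set, and the first case is the one where the second set is larger than the first.

```latex
\begin{align*}
k' > k:&\quad f'(n,k) \le 1 + f'(n,k') \le 1 + (2n - k') \le 2n - k
        && \text{since } k' \ge k+1, \\
k' = k:&\quad f'(n,k) \le 1 + f'(n-1,k) \le 1 + 2(n-1) - k = 2n - k - 1, \\
k' < k:&\quad f'(n,k) \le 1 + f'(n-(k-k'),k') \le 1 + 2(n-k+k') - k' = 2n - 2k + k' + 1 \le 2n - k
        && \text{since } k' \le k-1.
\end{align*}
```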

Ah, I see where I went wrong now, thanks!

(Gil: can you set the thread depth in comments from 1 to 2? This makes it easier to reply to a specific comment.)

Noam, I don’t see how one can derive f'(n) <= 2n, though I do find the bound plausible. I can get f'(n) <= n + f'(n-1) but this only gives a quadratic bound.

I did not believe I could set it, but I succeeded!!!

Is it not trivial that $f(n)$ is at least roughly $2n$?

Take $F_i$ = {1,…,i} for $i \le n$ and $F_i$ = {(i-n+2),…,n} for $i > n$.

In general, if each $F_i$ is a single set then the families must be convex in the “i”-direction in the sense that if an element is present in two sets then it must be present in all sets between them.

Well, it’s late at my end of the world so I’ll look in tomorrow again.

That should have said that the first sets are of the form {1,…,i} and the later sets of the form {i-n+1,…,n}

Ah, it looks like f'(n) = 2n for all n. To get the lower bound, look at

{}, {1}, {1,2}, {1,2,3}, …, {1,2,..,n}, {2,..,n}, {3,…,n}, …, {n}.

To get the upper bound, we follow Noam’s idea and move from each set to the next. When we do so, we add in some elements, take away some others, or both. But once we take away an element, we can never add it back in again. So each element gets edited at most twice; once to put it in, then again to take it out. (We can assume without loss of generality that we start with the empty set, since there’s no point putting the empty set anywhere else, and it never causes any harm.) This gives the bound of 2n.
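Both halves of this argument can be sanity-checked by computer for tiny n. The sketch below encodes subsets of {1,…,n} as bitmasks, builds the explicit up-then-down sequence, and brute-forces the longest sequence of distinct sets with $A_i \cap A_k \subset A_j$ for $i<j<k$. All function names are hypothetical, and the exhaustive search is only feasible for very small n.

```python
def is_convex(seq):
    """Check A_i & A_k <= A_j for all i < j < k (sets as bitmasks)."""
    return all(
        ((seq[i] & seq[k]) & ~seq[j]) == 0
        for i in range(len(seq))
        for j in range(i + 1, len(seq))
        for k in range(j + 1, len(seq))
    )


def up_down_sequence(n):
    """{}, {1}, {1,2}, ..., {1..n}, {2..n}, ..., {n} as bitmasks."""
    full = (1 << n) - 1
    up = [(1 << i) - 1 for i in range(n + 1)]
    down = [full & ~((1 << i) - 1) for i in range(1, n)]
    return up + down


def longest_convex_sequence(n):
    """Brute-force f'(n) by extending sequences one set at a time."""
    best = 0

    def extend(seq):
        nonlocal best
        best = max(best, len(seq))
        for cand in range(1 << n):
            if cand in seq:
                continue
            # cand plays the role of A_k; test it against every pair i < j
            ok = all(
                ((seq[i] & cand) & ~seq[j]) == 0
                for i in range(len(seq))
                for j in range(i + 1, len(seq))
            )
            if ok:
                seq.append(cand)
                extend(seq)
                seq.pop()

    extend([])
    return best


if __name__ == "__main__":
    print(len(up_down_sequence(5)), is_convex(up_down_sequence(5)))
    print([longest_convex_sequence(n) for n in range(4)])
```

For n = 1, 2, 3 the search returns 2, 4, 6, in agreement with f'(n) = 2n.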

I think the same argument gives f'(n,k) = 2n-k (or maybe 2n-k+1).

There is another nice way to construct a maximal sequence, we start with {}, {1},{1,2},{2},….{i,i+1},{i+1},{i+1,i+2},{i+2}…. This also gives length 2n, and uses only small sets.

A nice way to visualize this family is that we are following a hamiltonian path in a graph and alternatingly state the edges and vertices we visit.

I guess I just repeated what Klas said. 🙂



It should be possible to compute f(4). We have a general lower bound $f(n) \geq 2n$ which gives f(4) >= 8, and the recursion (if optimised) gives $f(4) \leq 11$. Actually I conjecture f(4)=8, after failing several times to create a 9-family sequence. What I can say is that given a 9-family sequence, one cannot have the set 1234={1,2,3,4} (as this creates an ascending chain to the left and a descending chain to the right, which leads to at most 8 families). I also know that there do not exist F_i, F_j with $|i-j| > 2$ that contain A, B respectively with $|A \cap B| \geq 2$ (as there are not enough sets containing $A \cap B$ to fill the intervening families, now that 1234 is out of play). I also know that without loss of generality one can take $F_1 = \{\emptyset\}$ (or one can simply remove the empty set family altogether and drop f(n) by one). This already eliminates a lot of possibilities, but I wasn’t able to finish the job.

I think it’s easy to show that f'(n) <2n+1. Each element i is contained in the sets [F_b_i, F_b_i+1,…,F_e_i]. So we have n intervals corresponding to n elements. Since all sets are different each set has to be either a beginning or an end of an interval, so we can't have more than 2n.

On my flight down to Stockholm this morning I thought about this problem again and I think I can push the lower bound up a bit.

Define […] and for […] we define […]. Now order the […] lexicographically according to the indices. Unless I’ve missed something this gives a quadratic lower bound on f(n).

Right away I don’t see why this can’t be done with more indices on the “[…]” families. E.g. for […] take […] and order them lexicographically. But if we do this with a number of indices which grows with n we seem to get a superpolynomial lower bound, which makes me a bit worried.

Now it’s time for me to pick up my luggage and travel on.

As stated earlier this example does not work because it violates the disjointness condition in the definition of the problem.

Here’s an alternative perspective on the problem. Take an n-dimensional cube, its vertices corresponding to sets. Now color a subset of its vertices using the integers such that for certain faces F one has the constraint:

(*) The colors in F have to be contiguous subset of integers.

What is the maximum number of colors that such a coloring can have?

If the set of faces on which (*) has to hold is the set of all faces containing a designated special vertex (corresponding to {1,2,…,n}), then this is just a reformulation of the original problem.

On the other extreme, if (*) has to hold for all faces, then the maximum number of colors is n+1:

Restrict to the smallest face containing both the min and max color vertex. (u and v respectively) If this face contains no other colored vertex, one is done. Otherwise, take a third vertex w and recurse on the minimum faces containing u and w, and w and v, respectively.

Can one say anything about the case where the faces on which (*) has to hold is somewhere between those two extremes?

I like this alternate perspective, but I don’t quite see why it’s a reformulation of the original problem when you consider faces that contain a designated vertex. It seems to me that this colouring version of the problem corresponds to the following constraint in the original problem: for each $i < j < k$ and for each $a$, if $a \in S \in F_i$ and $a \in T \in F_k$ for some $S$ and $T$, then there exists $U \in F_j$ with $a \in U$. And this doesn’t seem quite the same as the original constraint; e.g., $F_1 = \{\{1,2,3\}\}$, $F_2 = \{\{2\}, \{3\}\}$, $F_3 = \{\{2,3,4\}\}$ seems to satisfy the colouring constraint but not the original constraint.

Perhaps I’ve not understood things properly though…

Nicolai meant faces of any dimension containing the designated vertex. You took only faces of co-dimension 1. Hence the discrepancy.
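The discrepancy can be seen concretely on the three-family example from the question. In this sketch, `elementwise_ok` encodes the single-element constraint (faces of co-dimension 1 only) and `satisfies_star` encodes the original condition (*); both names are made up for the illustration.

```python
def elementwise_ok(families):
    """Single-element constraint: if a lies in a set of F_i and in a set of
    F_k, then every F_j in between has some set containing a."""
    supports = [set().union(*F) for F in families]
    for i in range(len(families)):
        for k in range(i + 2, len(families)):
            for j in range(i + 1, k):
                if not (supports[i] & supports[k]) <= supports[j]:
                    return False
    return True


def satisfies_star(families):
    """Original condition (*): the whole intersection S & T must lie in one R."""
    for i in range(len(families)):
        for k in range(i + 2, len(families)):
            for S in families[i]:
                for T in families[k]:
                    for j in range(i + 1, k):
                        if not any((S & T) <= R for R in families[j]):
                            return False
    return True


example = [
    [frozenset({1, 2, 3})],
    [frozenset({2}), frozenset({3})],
    [frozenset({2, 3, 4})],
]

if __name__ == "__main__":
    print(elementwise_ok(example), satisfies_star(example))
```

The example passes the single-element test but fails (*), because no set of the middle family contains {2,3}.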

In the following I try to generalize the idea of Terence Tao that you cannot

have a big set (the full set in his case) in a family. I hope the proof is right and

that I did not mess up the constants.

Let $F_1,..,F_l$ be a sequence of disjoint families of sets over $[n]$ which satisfy condition (*).

Say that $\{1,\dots,n-k\} \in F_l$, we prove that $l \leq (n-k+1) f(k)$.

Let $S_i \in F_i$ and write $A_i$ for its restriction to $[n-k]$.

Because of condition (*), we have a sequence of sets $S_1,\dots,S_l$ such that

the sequence $A_1,\dots,A_l$ is increasing (not necessarily strictly) (you build it as in the case of one set per family).

Moreover, we can choose each $A_i$ to be maximal for the restriction of the sets of $F_i$ to $[n-k]$.

Let $A_j$ be the first set of size $s$ and let $A_{w+1}$ be the first set of size strictly greater than $s$.

We have $A=A_j=A_{j+1} = \dots = A_{w}$.

Consider now the restriction of $F_j,\dots,F_{w}$ to elements containing $A$:

we remove $A$ from these elements and we remove the others entirely.

We have a sequence of disjoint families $F’_{j},\dots,F’_{w}$ over $[n] \setminus A$ which satisfy

(*). Since $A$ has been chosen to be maximal, the families $F’_{j},\dots,F’_{w}$ contain only sets over $\{n-k+1,\dots,n\}$.

Therefore $w-j+1 \leq f(k)$. Since there are at most $n-k+1$ possible sizes of sets $A_i$,

we have $l \leq (n-k+1) f(k)$.

Therefore if there is a set of size $n-k$ in one of the families $F_i$ in a sequence $F_1,\dots,F_t$,

$t \leq (2 (n-k)+1) f(k)$.

This idea is an attempt to give a different upper bound to $f(n)$

and maybe to obtain $f(4)=8$.

For $n=4$ and $k=1$, that is $\{1,2,3\}$ appears in a family,

we have $t \leq (2(4-1)+1)(f(1)-1)=7$ : it rules out this case.

For $n = 4$ and $k=2$, that is $\{1,2\}$ appears in a family, we have $t \leq (2(4-2)+1)(f(2)-1) = 15$

and it does not give anything interesting.

We could try to improve this result by using the constraints

between sequences of the form $A_j,\dots,A_w$.

I’m trying to concentrate on the case where the support of each family is the entire set [n]. I think it suffices to establish the polynomial estimate for this case since, in the generic case, the support can only change 2n-1 times.

For the case of 4 I seem to have found the chain of length 6: {{1},{2},{3},{4}}, {{23},{13},{24}}, {{123},{234}}, {{1234}}, {{134},{124}}, {{14},{12},{34}} and I’m pretty convinced there’s no chain of length 7.
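This chain can be checked mechanically. The sketch below reads each string such as "134" as the set {1,3,4} (my interpretation of the notation) and tests three things: condition (*), disjointness of the families, and that every family has support [4]. The helper names are hypothetical.

```python
def parse(*names):
    """Turn strings like "134" into the family {{1,3,4}, ...}."""
    return [frozenset(int(ch) for ch in name) for name in names]


def satisfies_star(families):
    """Check (*): for i < j < k, every S & T lies inside some R in F_j."""
    for i in range(len(families)):
        for k in range(i + 2, len(families)):
            for S in families[i]:
                for T in families[k]:
                    for j in range(i + 1, k):
                        if not any((S & T) <= R for R in families[j]):
                            return False
    return True


def are_disjoint(families):
    """No set may appear in two families."""
    all_sets = [S for F in families for S in F]
    return len(all_sets) == len(set(all_sets))


def full_support(families, n):
    """Every family must use every element of {1,...,n}."""
    ground = set(range(1, n + 1))
    return all(set().union(*F) == ground for F in families)


chain = [
    parse("1", "2", "3", "4"),
    parse("23", "13", "24"),
    parse("123", "234"),
    parse("1234"),
    parse("134", "124"),
    parse("14", "12", "34"),
]

if __name__ == "__main__":
    print(len(chain), satisfies_star(chain), are_disjoint(chain), full_support(chain, 4))
```

All three checks pass for the six families listed; the claim that no chain of length 7 exists is not tested here.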

I think I can show f(4) is less than 11.

Assume we have 11 or more families. There are 8 types of

sets in terms of which of the first three elements are

in the set.

We must have a repetition of the same type in

two different families.

Then every set must contain an element

that contains the 3 elements of the repetition.

Now if the repetition is not null there can be

at most 8 elements that contain the repetition

but we have 9 families besides A and B and so there is a contradiction.

Now we can repeat this argument for each set of

four elements. so we have at most 5 families containing the

null set and each single element. And we have adding

one element not in a set in a family and having the resulting

augmented set outside the family is forbidden.

so outside of the 5 sets that contain

the singleton elements and the null set there are no

two element sets, no single element sets

and no null set. but that leaves 5 elements for 6

sets which gives a contradiction. So f(4) cannot be 11.

Apologies if I ask questions that are answered in earlier comments or earlier discussion posts on this problem. It occurred to me that if we are trying to investigate sequences of set systems $F_1, \dots, F_t$ such that for every $i < j < k$ a certain property holds, then it might be interesting to try to understand what triples with that property can be like. That is, suppose you have three families $F_1, F_2, F_3$ of subsets of $[n]$ such that no set belongs to more than one family, and suppose that for every $A \in F_1$ and every $C \in F_3$ there exists $B \in F_2$ such that $A \cap C \subset B.$ What can these families be like?

At this stage I have so little intuition about the problem that I’d be happy with a few non-trivial examples, whether or not they are relevant to the problem itself. To set the bar for non-triviality, here’s a trivial example: insist that all sets in $F_1$ are contained in $[1,s]$ and not contained in $[r,s]$ (where $r \le s$), that all sets in $F_3$ are contained in $[r,n]$ but not in $[r,s],$ and that $F_2$ contains the set $[r,s].$

Now any example that looks anything like that is fairly hopeless for the problem because if you have a parameter that “glides to the right” as the family “glides to the right” then it can take at most $n$ values, so there can be at most $n$ families.

Let me ask a slightly more precise question. Are there good examples of triples of families where the middle family has several sets, and needs to have several sets?

Actually, I’ve thought of an example, but it’s somehow trivial in a different way. Let $F_1$ be a fairly large collection of random sets and let $F_3$ be another fairly large collection of random sets, chosen to be disjoint. (That is, choose a large collection of random sets and then randomly partition it into two families.) Now let $F_2$ consist of all sets $A \cap C$ such that $A \in F_1$ and $C \in F_3.$ Then trivially it has the property we want, and since with high probability the sets in $F_2$ have size around $n/4$ and the sets in $F_1$ and $F_3$ have size around $n/2,$ the three families are disjoint.
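A quick simulation makes this random example tangible. This is only a sketch: the function name `random_triple`, the parameters n=60 and m=8, and the fixed seed are arbitrary choices for illustration, and each element is put into a random set independently with probability 1/2.

```python
import random


def random_triple(n, m, seed=0):
    """Return (first, middle, third): two families of m random subsets of
    {1,...,n} and the family of all pairwise intersections A & C."""
    rng = random.Random(seed)
    pool = []
    while len(pool) < 2 * m:
        s = frozenset(x for x in range(1, n + 1) if rng.random() < 0.5)
        if s not in pool:  # keep the random sets distinct
            pool.append(s)
    first, third = pool[:m], pool[m:]
    middle = {A & C for A in first for C in third}
    return first, middle, third


def triple_property(first, middle, third):
    """For every A in first and C in third some B in middle contains A & C."""
    return all(any((A & C) <= B for B in middle) for A in first for C in third)


def pairwise_disjoint(first, middle, third):
    """No set belongs to more than one of the three families."""
    return not (set(first) & middle or set(third) & middle or set(first) & set(third))


if __name__ == "__main__":
    first, middle, third = random_triple(60, 8)
    print(triple_property(first, middle, third), pairwise_disjoint(first, middle, third))
    print(sum(map(len, first)) / len(first), sum(map(len, middle)) / len(middle))
```

The property holds by construction; the disjointness check and the printed average sizes (near n/2 and n/4) are where the randomness matters.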

This raises another question. Is there a useful sense in which this second example is trivial? (By “useful sense” I mean a sense that shows that a random construction like this couldn’t possibly be used to create a counterexample to the combinatorial version of the polynomial Hirsch conjecture.)

Another weirdness is that my last comment has appeared before some comments that were made several hours earlier.

Let me think briefly about random counterexamples. Basically, I have an idea for such an example and I want to check that it doesn’t work.

The idea is this. If you take a random collection of sets of size […] then as long as it is big enough its lower shadow at the layer […] will be full. (By that I mean that every set of size […] will be contained in one of the sets in the collection.) Also, as long as it is small enough, the intersection of any two of the sets will have size about […]. I can feel this not working already, but let me press on. If we could get both properties simultaneously, then we could just take a whole bunch of random set systems consisting of sets of size […]. Any two sets in any two of the collections would have small intersection and would therefore be contained in at least one set from each collection. This is of course a much much stronger counterexample than is needed, since it dispenses with the condition […]. So obviously it isn’t going to work.

[Quick question: does anyone have a proof that you can’t have too many disjoint families of sets such that any three families in the collection have the property we are talking about? Presumably this is not hard.]

But in any case it’s pretty obvious that if you’ve got enough sets to cover all sets of size […] then you’re going to have to have some intersections that are a bit bigger than that.

Nevertheless, let me do a quick calculation in case it suggests anything. If I want to choose a random collection of sets of size […] in such a way that all sets of size […] are covered, then, crudely speaking, I need the probability of choosing a given set of size […] to be the reciprocal of the number of […]-sets containing any given […]-set. That is, I need to take a probability of […]. That’s actually the probability that means that the expected number of sets in the family that contain any given […]-set is 1, which isn’t exactly right but gives the right sort of idea. So if […] and […] then we get that the number of sets is […].
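The crude calculation above can be sketched numerically. In this sketch the chosen sets have size `r` and the sets to be covered have size `s`; these names and the function names are assumptions made for illustration, not notation from the comment. The probability is the reciprocal of the number of r-sets containing a fixed s-set, and the expected family size follows by multiplying by the number of r-sets.

```python
from fractions import Fraction
from math import comb


def covering_probability(n, r, s):
    """Probability p of picking each r-set so that a fixed s-set
    lies in exactly one chosen r-set on average (hypothetical helper)."""
    # Number of r-subsets of an n-set that contain a fixed s-set.
    supersets = comb(n - s, r - s)
    return Fraction(1, supersets)


def expected_family_size(n, r, s):
    """Expected number of chosen r-sets when each is picked with probability p."""
    return covering_probability(n, r, s) * comb(n, r)


if __name__ == "__main__":
    # Sample values only; they are not taken from the discussion.
    print(covering_probability(10, 4, 2), expected_family_size(10, 4, 2))
```

As the comment itself notes, an expected count of 1 is not the same as covering every s-set, so this only gives the right order of magnitude.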

Hmm, the other probability I wanted to work out was the probability that the intersection of two sets of size […] has size substantially different from […]. In that way, I wanted to work out how many sets you could pick with no two having too large an intersection. If that was bigger than the size above then it would be quite interesting, but of course it won’t be.

Quick question: does anyone have a proof that you can’t have too many disjoint families of sets such that any three families in the collection have the property we are talking about? Presumably this is not hard.

The elementary argument kills this off pretty quickly and gives a bound of […] in this case. Indeed, the case n=1 follows from direct inspection, and for larger n, once one has t families for some […], two of them have a common element, say n; then the other t-2 families must have sets that contain n. Now restrict to those sets that contain n, then delete n, and we get from the induction hypothesis that […], and the claim follows.

A rather general question is this. A basic problem with trying to find a counterexample is that the linear ordering on the families makes it natural to try to associate each family with … something. But what? With a ground set of size […] using the ground set is absolutely out. So we need to create some other structure. Klas tried this above, with the set […]. I vaguely wonder about something geometric, but I start getting the problem that if one has a higher-dimensional structure (in order to get more points) then one still has to find a nice one-dimensional path through it. Maybe something vaguely fractal in flavour would be a good idea. (Please don’t ask me to say more precisely what I mean by this …)

I am sorry about the strange WordPress behavior.

For improved lower bounds: Considering random examples for our families is appealing. One general “sanity test” (suggested by Jeff Kahn) that is rather harmful to some random suggestions is: “Check what the proof does for the example.” Often when you look at such an example, the union of the sets in the first very few families will be everything, and likewise for the very few last families. And this looks to be the case also when you restrict yourself to sets containing certain elements. This “sanity check” does not kill every random example but it is useful.

For improved upper bounds: In the present proof in order to reach a set R from the first r families and a set T from the last r families so that R and T share k elements we can only guarantee that for

r = f(n/2) + f((n-1)/2)+ f((n-2)/2))+…+ f((n-k)/2).

Somehow it looks that when k is large we can do better. And that we do not have to waste so much effort further down in the recursion.

In particular, we reach the same set in the first r families and the last r families only for r roughly n f(n/2); it would be nice to improve this.
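To see where the rough figure n f(n/2) comes from, here is a short worked bound. It assumes that f is nondecreasing (an example on a smaller ground set is also an example on a larger one) and ignores rounding of the arguments; both are assumptions of this sketch rather than statements from the comment.

```latex
r \;=\; \sum_{i=0}^{k} f\!\left(\frac{n-i}{2}\right)
  \;\le\; (k+1)\, f\!\left(\frac{n}{2}\right),
\qquad\text{so for } k \text{ close to } n:\quad r \;\lesssim\; n\, f\!\left(\frac{n}{2}\right).
```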

I think I can show f(4) is less than 10. We previously had that it must be less than 11.

Assume we have 10 or more elements. Then there are 8 types of sets in terms of which of the first three elements are in the set. We must have a repetition of the same type in sets in two different families. Then every set must contain an element that contains the 3 elements of the repetition. Now if the repetition is not null there can be at most 8 elements that contain the repetition, but we have 8 families besides A and B and so there is a contradiction. But we can improve this, since we have two instances of the repetition in the first two elements, so we have at most 6 unused elements.

Now we can repeat this argument for each set of four elements, so we have at most 5 families containing the null set and each single element. And adding one element not in a set in a family and having the resulting augmented set outside the family is forbidden. So outside of the 5 sets that contain the singleton elements and the null set there are no two element sets, no single element sets and no null set. But that leaves 5 elements for 5 sets. This means that each family must contain one of the sets with more than two elements. In particular one must contain the set with four elements and one a set with three elements. Then since their intersection will have three elements, every family must have a set with three elements, but there are not enough sets with three elements to go around, which gives a contradiction. So f(4) cannot be more than 9.

Gil, could you remind us what is known about the case d=2? From your previous blog post I understand that f(2,n)=O(n log^2 n), while the only construction I can see for f(2,n) is of length n-1. Are these the best that is known?

I think that the following argument establishes an O(nlogn) upper bound for f(2,n): Define the “prefix-support” of an index i to be the support of all sets in all F_j for ji. Now let us ask whether there exists some i in the middle third (t/3 <i < 2t/3) such that the intersection of the prefix-support and the suffix-support of i is less than k=n/log(n). If not, then every F_i in the middle third must have at least n/(2k) pairs in it, so then t/3 is bounded by the total number of pairs (n choose 2) divided by n/(2k), which gives an O(nlogn) bound on t (for k=n/logn). Otherwise, fix such i, and let m be the size of the prefix-support, so the size of the suffix-support is at most n-m+k, and we get the recursion […], which (I think) solves to O(nlogn) for k=n/logn when taking into account that m=theta(n).

WordPress ate part of the definitions in the beginning: the prefix-support of i is the support of $latex \cup_{ji} F_j$.

WordPress ate the LaTeX again, so let’s try verbally: the prefix-support is the combined support of all set systems that come before i, while the suffix-support is the support of all sets that come after i.

OK, this was not only garbled by wordpress but also by myself and is quite confused and with typos. I think it still works, and will try to write a more coherent version later…

As another attempt to gain intuition, I want to have a quick try at a just-do-it construction of a collection of families satisfying the given condition. Let’s call the families I’m trying (with no hope of success) to construct […].

The pair of families with most impact on the other families is […], so let me start by choosing those so as to make it as easy as possible for every family in between to cover all the intersections of sets in […] and sets in […]. Before I do that, let me introduce some terminology (local to this comment unless others like it). I’ll write […] for the “pointwise intersection” of […] and […], by which I mean […]. And if […] and […] are set systems I’ll say that […] covers […] if for every […] there is some […] such that […]. Then the condition we want is that […] covers […] whenever […].
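The terminology in this comment translates directly into a small checker. The sketch below reads “covers” as: every set of the second system is contained in some set of the first, and reads the target condition as: the middle family covers the pointwise intersection of the two outer families, for every ordered triple of families. The function names and the representation of families as collections of Python sets are assumptions of this sketch.

```python
from itertools import combinations


def pointwise_intersection(fam_a, fam_b):
    """All intersections A & B with A in fam_a and B in fam_b."""
    return {a & b for a in fam_a for b in fam_b}


def covers(family, targets):
    """True if every target set is contained in some member of `family`."""
    return all(any(t <= a for a in family) for t in targets)


def satisfies_condition(families):
    """Check that the families are pairwise disjoint (share no set) and that
    the j-th family covers the pointwise intersection of the i-th and k-th
    families whenever i < j < k."""
    fams = [set(map(frozenset, f)) for f in families]
    # Disjointness: no set may belong to two families.
    for f, g in combinations(fams, 2):
        if f & g:
            return False
    # combinations() yields index triples with i < j < k.
    for i, j, k in combinations(range(len(fams)), 3):
        if not covers(fams[j], pointwise_intersection(fams[i], fams[k])):
            return False
    return True
```

For instance, `satisfies_condition([[{1}], [{1, 2}], [{2}]])` holds because the only intersection to cover is the empty set, while `[[{1}], [{2}], [{1, 3}]]` fails because no set in the middle family contains the element 1.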

If we want it to be very easy for the families […] with […] to cover […] then the obvious thing to do is make […] and […] as small as possible and to make the intersections of the sets they contain as small as possible as well. Come to think of it (and although this must have been mentioned several times, it is only just registering with me) it’s clear that WLOG there is just one set in […] and one set in […], because making those families smaller does not make any of the conditions that have to be satisfied harder.

A separate minimality argument also seems to say that WLOG not only are […] and […] singletons, but they are “hereditarily” singletons in that their unique elements are singletons. Why? Well, if […] then removing an element from […] does not make anything harder (because […] is not required to cover anything) and makes all covering conditions involving […] easier (because sets in the intermediate […] cover a proper subset of […] if they cover […]). In fact, we could go further and say that […] and that […] for some […], but I don’t really like that because we can play the empty-set trick only once and it destroys the symmetry. So I’m going to ban the empty set for the purposes of this argument.

So now […] and […] for some […]. The next question is whether […] should or should not equal […]. This is no longer a WLOG I think, because if […] then it makes it easier for intermediate families to cover […] (they do automatically) but when we put in […] it means that […] and […] are (potentially) different sets, so there is more to keep track of. But a further […] will either be earlier than […] and not care about […] or later and not care about […], so my instinct is that it is better to make […]. (And since this is a greedyish just-do-it, there is no real harm in imposing some minor conditions like this as we go along.)

Since this comment is getting quite long, I’ll continue in a new one rather than risk losing the whole lot.

This comment continues from this one.

There is now a major decision to be made: which family should we choose next? Should it be one of the extreme families (without loss of generality […]) or should we try a kind of repeated bisection and go for a family right in the middle? Since going for a family right in the middle doesn’t actually split the collection properly in two (since the two halves will have plenty of constraints that affect both at the same time) I think going for an extreme family is more natural. So let’s see whether we can say anything WLOG-ish about […].

I now see that I said something false above. It is not true that the unique set in […] is WLOG a singleton, because there is one respect in which that makes life harder: since the […] are disjoint we cannot use that singleton again. So let us stick with the decision to choose singletons but bear in mind that we did in fact lose generality (but in a minor way, I can’t help feeling).

I also see that I said something very stupid: I was wondering whether it was better to take […] or […], but of course taking […] was forbidden by the disjointness condition.

The reason I noticed the first mistake was that that observation seemed to iterate itself. That is, if we think greedily, then we’ll want to make […] be of the form […] and so on, and we’ll quickly run out of singletons.

So the moral so far is that if we are greedy about making it as easy as possible for […] to cover […] whenever […] then we make the disjointness condition very powerful.

Since that is precisely the sort of intuition I was hoping to get from this exercise, I’ll stop this comment here. But the next plan is to try once again to use a greedy algorithm, this time with a new condition that will make the disjointness condition less powerful. Details in the next comment, which I will start writing immediately.

It seems that my [previous comment](https://gilkalai.wordpress.com/2010/09/29/polymath-3-polynomial-hirsch-conjecture/#comment-3448) went somewhat back in history.

Sorry, my previous post was awful since WordPress does not understand LaTeX directly. I will try to add LaTeX markup; I hope it is the right thing to do. The computations on examples were false because I used something I did not establish.

Now let’s try to improve a bit what I have said.

The idea is that if a big set {1,…,n-k} appears in a family, we can decompose the sequence of families […] into levels: we have a sequence of sets […] such that the intersection of S_i with {1,…,n-k} is constant on a level.

Moreover each level is of size at most f(k).

We can improve on that a bit to try to compute the first values of f.

Let g(n,m) be the maximum length of a sequence of disjoint families satisfying (*) such that only sets of size at most m appear in the families. We have g(n,n) = f(n) and g(n,·) is increasing.

Assume now there is a set of size n-k but no larger one in a sequence of t families. Then we can bound t by […].

Consider now the case where we have a set of size n-1 but not of size n. […].

We can see that the first and last levels have only two sets to share; therefore we can say WLOG that the first level is of size 2 and the last of size 0. By a bit of case study I think I can prove that there are at most two levels with two families.

Therefore the size of such a sequence is bounded by 1 + 2*2 (the two levels of size two) + 2(n-1)-3 (the last level is removed as well as the two of size 2).

So if there is a set of size n-1 (but not the one of size n), the size of the sequence is at most 2n. Moreover, one can find such a sequence of size 2n: […]

Well, now that really rules out the case of a set of size 3, but none of size 4, in a sequence built over {1,2,3,4}.

Therefore to prove f(4)=8, one has only to look at a sequence of families containing only pairs. That is computing g(4,2) and it must not be that hard.

Before starting this comment I took a look back and saw that I had missed Gil’s discussion of […]. Well, my basic thought now is that since a purely greedy algorithm encourages one to look at […], which is a bit silly, it might be a good idea to try to apply a greedy algorithm to the question of lower bounds for […].

I’ll continue with the notation I suggested in this comment.

As before, it makes sense for […] and […] to be singletons (where by “makes sense” I mean that WLOG this is what happens). Now of course by “singleton” I mean “set of size […]”. Actually, since this is just a start, let’s take […] and try to get a superlinear lower bound (which does in fact exist according to an earlier post of Gil’s, according to Noam Nisan above).

So now […] and […]. The first question is whether we should take […] and […] to be disjoint or to intersect in a singleton. It seems pretty clearly better to make them disjoint, so as to minimize the difficulties later.

Now what? Again, let us go for […] next. Here’s an argument that I find quite convincing. Since every set system covers […], WLOG […] is a singleton […]. (Let’s also write […] and […].) Now we care hugely about […] because there are lots of intermediate […], and not at all about […]. Oh wait, that’s false isn’t it, because for each […] we need […] to cover […]. So it’s not even obvious that we want […] to be a singleton. Indeed, if […] and […] then all sets in all […] must either be disjoint from […] or must intersect it in […]. That tells us that the element 1 is banned, and banning elements is a very bad idea because you can do it at most […] times.

On the other hand, if we keep insisting that we mustn’t ban elements, that is going to be a problem as well, so there seem to be two conflicting pressures here.

For now, however, I’m going to go with not banning elements. So that tells us that, in order not to restrict the later […] too much, it would be a good idea if […] contains at least one set that contains […] and at least one set that contains […]. But in order to do that in a “minimal” way, let us take […], where I am now abbreviating […] by […]. I am of course also thinking of the […] as graphs, so let me make that thought explicit: we want a sequence of graphs […] such that no two of the graphs share an edge, and such that if […] and […] and […] contain edges that meet at a vertex, then […] must also contain an edge that meets at that vertex.

Ah, rereading Noam Nisan’s question I now see that the superlinear bound he was referring to was an upper bound. So it could be that obtaining a lower bound even of […] would be interesting.

So now we have chosen the following three graphs: […]. Let’s assume that […] and […] but write it […] because it is harder to write […].

What constraint does that place on the remaining graphs […]? Since no set in […] or […] intersects any set in […], it is not hard for […] to cover […] and […]. So we just have to worry about […] covering […]. And we have made sure that that happens automatically, since […] covers all possible intersections with a set in […]. (This is another sort of greed that is clearly too greedy.) So the only constraint comes from the disjointness condition: every edge in […] must contain a vertex outside the set […], since we have used up the edges […].

Now let’s think about […]. (It’s no longer clear to me that we wanted to decide […] at the beginning; let’s keep an open mind about that.)

The obvious analogue of what we did when choosing […] and […] is to set […]. But this is starting to commit ourselves to a pattern that we don’t want to continue, since it stops at […]. (But let me check whether it works. If […] is the set of all pairs with maximal element […] then if […] and an edge in […] meets an edge in […] then those two edges must either be of the form […] or of the form […] for some […]. So they intersect in some […], which means that their intersection is covered by the edge […].)
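The check in the parenthetical can also be run mechanically. The sketch below assumes the pattern is: one graph for each m from 2 to n, consisting of all pairs whose larger element is m. That indexing and the function names are assumptions of this sketch. It confirms, for a given n, that the graphs are edge-disjoint and that every middle graph covers the intersection of any edge from an earlier graph with any edge from a later one.

```python
from itertools import combinations


def max_element_graphs(n):
    """One graph per m = 2..n: all pairs {s, m} with s < m."""
    return [{frozenset((s, m)) for s in range(1, m)} for m in range(2, n + 1)]


def edge_disjoint(graphs):
    """True if no edge appears in two of the graphs."""
    total = sum(len(g) for g in graphs)
    return total == len(set().union(*graphs))


def middle_covers(graphs):
    """For i < j < k, each intersection of an edge of graph i with an edge
    of graph k must be contained in some edge of graph j."""
    for i, j, k in combinations(range(len(graphs)), 3):
        for e in graphs[i]:
            for f in graphs[k]:
                if not any((e & f) <= g for g in graphs[j]):
                    return False
    return True


if __name__ == "__main__":
    graphs = max_element_graphs(6)
    print(len(graphs), edge_disjoint(graphs), middle_covers(graphs))
```

The sequence has n-1 graphs and uses every pair exactly once, which is why the pattern cannot be extended any further.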

If we decide at some point to break the pattern, then what happens? Again, this comment is getting long so I’ll start a new one.

This is a continuation of my previous comment.

Let us experiment a bit and try […]. That is, in order to break a pattern that we need to break sooner or later, let us miss out […] from […] and explore the implications for future […]. For convenience I am now not going to assume that we have chosen […]: my greedy (but I hope not overgreedy) algorithm just chooses the families in the obvious order.

What condition does this impose on future […]? Any intersection with […] will be […] or […], so it will be covered by […] and […]. So that’s fine. But the fact that we have missed out […] from […] means that we can’t afford any edge that contains the vertex 3, since that will intersect an edge in […] in the vertex 3, and will then not be covered by […]. That means that the best our lower bound for […] can be is […], and it probably can’t even be that. So this is bad news if we are going for a superlinear lower bound.

Let’s instead try […], which is a genuinely different example. Can any edge in […] contain 1? If it does, then … OK, it obviously can’t, so once again we’re in trouble.

If we don’t ban vertices, then what are our options? Let’s try to understand the general circumstance under which a vertex gets banned. Suppose that a vertex […] is used in some […] and not in […], where […]. Then that vertex cannot be used in […] if […], for the simple reason that then […] and […] is not covered by […].

So let […] be the set of all vertices used by […] (or equivalently the union of the sets in […]). We have just seen that if […] and […] then […]. So a preliminary question we should ask is whether we can find a nice long sequence of sets […] satisfying this condition.

The answer is potentially yes, since there is no reason to suppose that the sets […] are distinct. But let us first see what happens if they are distinct. I think there should be a linear upper bound on their number.

Let […] be a sequence of distinct sets with this property. Let us assume that the ground set is ordered in such a way that each new element that is added is as small as possible.

Hmm, I don’t think that helps. But how about this. For each element […] of the ground set, the condition tells us that the set of […] such that […] is an interval […]. So we ought to be able to rephrase things using those intervals. The question becomes this: let […] be a collection of subintervals of $[m]$. For each $i\in[m]$ let […]. How big can $[m]$ be if all the sets […] are distinct?

That’s much better, since it tells us that between each […] and […] some interval […] must either just have started or just have finished. So we get a bound of […]. (Perhaps it’s actually […], but I can’t face thinking about that.)

This suggests to me a construction, but once again this comment is getting a bit long so I’ll start a new one.

I’ve just been reading properly some of the earlier comments and noticed that the argument I gave in the third-to-last paragraph is essentially the argument that Noam and Terry came up with to bound the function […].

I want to try out a construction suggested by the thoughts at the end of this comment, though it may come to nothing.

The basic idea is this. Let’s define our sets […] as follows. We begin with a collection of increasing intervals $[1,i]$ and then when we reach $[1,n]$ we continue with $[2,n],[3,n],\dots,\{n\}.$ These sets have the property that if […] and […] then […].
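As a quick check of this sequence of sets, the sketch below generates the increasing intervals followed by the shrinking ones and tests the property that for any three positions in order, the intersection of the two outer sets is contained in the middle one. Reading the stated property this way, and the function names used, are assumptions of this sketch.

```python
from itertools import combinations


def interval_sets(n):
    """[1,1], [1,2], ..., [1,n], then [2,n], [3,n], ..., {n}."""
    up = [set(range(1, i + 1)) for i in range(1, n + 1)]
    down = [set(range(i, n + 1)) for i in range(2, n + 1)]
    return up + down


def outer_intersections_inside_middle(sets):
    """True if sets[i] & sets[k] is a subset of sets[j] whenever i < j < k."""
    return all(
        sets[i] & sets[k] <= sets[j]
        for i, j, k in combinations(range(len(sets)), 3)
    )


if __name__ == "__main__":
    sets = interval_sets(5)
    print(len(sets), outer_intersections_inside_middle(sets))
```

The sequence has 2n-1 sets, all distinct, which is in line with the linear bound discussed in the earlier comment.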

Now I want to define for each […] a graph […] with vertex set […] (except that strictly speaking […] has vertex set $[n]$ and the vertices outside […] are isolated). I want these […] to have the following two properties. First, […] is a vertex cover of […]: that is, all the vertices in […] are used. Secondly, the […] are edge-disjoint. Suppose we have these two conditions. Then if […] then […] consists of vertices that belong to […] and hence to […], and they are then covered by […].

The most economical vertex covers will be perfect matchings, though that causes a slight problem if  is odd. But maybe we can cope with the extra vertices somehow.

I think that so far these conditions may be too restrictive. Indeed, for a trivial reason we can’t cover  so we should have started with  But if we do that, then  is forced to be  which means that  is forced to contain  and all sorts of other decisions are pretty forced as well.

So the extra idea that might conceivably help (though it is unlikely) is to start with  for some small  That might give us enough flexibility to make the choices we need to make and obtain a lower bound of  However, I don’t rule out some simple counting argument showing that we use up the edges in some  too quickly.

Looking at n=4, here’s a nontrivial (better than n-1) sequence for f(2,n): {12}, {23,14}, {13,24}, {34}.
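A small sequence like this can be sanity-checked by brute force. The sketch below assumes that (*) reads: for every i < j < k, every S in F_i and every T in F_k, some set of F_j contains S ∩ T. The helper name `satisfies_star` is made up for this illustration and is not from the thread.

```python
from itertools import combinations

def satisfies_star(families):
    """Brute-force check of (*) together with disjointness of the families."""
    fams = [[frozenset(s) for s in fam] for fam in families]
    # Disjointness: no set may belong to two different families.
    all_sets = [s for fam in fams for s in set(fam)]
    if len(all_sets) != len(set(all_sets)):
        return False
    # (*): for i < j < k, S in F_i, T in F_k, some R in F_j contains S & T.
    for i, k in combinations(range(len(fams)), 2):
        for j in range(i + 1, k):
            for S in fams[i]:
                for T in fams[k]:
                    if not any((S & T) <= R for R in fams[j]):
                        return False
    return True

# The n=4 sequence {12}, {23,14}, {13,24}, {34} from the comment above.
example = [[{1, 2}], [{2, 3}, {1, 4}], [{1, 3}, {2, 4}], [{3, 4}]]
print(satisfies_star(example))  # True
```

The check is cubic in the number of families, so it is only meant for hand-sized examples.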

I think that this generalizes for general n: at the beginning put a recursive sequence on the first n/2 elements; at the end put a recursive sequence on the last n/2 elements; now at the middle we’ll put n/2 set systems where each of these has exactly n/2 pairs, where each pair has one element from the first n/2 elements and another from the last n/2 elements. (There are indeed n/2 such disjoint matchings in the bipartite graph with n/2 vertices on each side.) The point is that the support on all set systems in the middle is complete, so they trivially contain any intersection of two pairs.

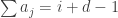

If this works then we get the recursion $f(2,n) \ge 2f(2,n/2) + n/2$, which solves to $\Omega(n \log n)$.

Noam, I don’t think this construction works. The problem is that the recursive sequences at either end obey additional constraints coming from the stuff in the middle. For instance, because the support on all the middle stuff is complete, the supports on the first recursive sequence have to be monotone increasing, and the supports on the second recursive sequence have to be monotone decreasing. (One can already see this if one tries the n=8 version of the construction.)
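The failure at n=8 can be reproduced mechanically. The sketch below builds the recursive sequence for n a power of 2 and tests (*) by brute force. The function names and the use of cyclic shifts for the middle matchings are assumptions made for this illustration; the obstruction described above should not depend on which matchings are chosen.

```python
from itertools import combinations

def satisfies_star(families):
    """True if for all i < j < k, S in F_i, T in F_k, some R in F_j contains S & T."""
    return all(
        any((S & T) <= R for R in families[j])
        for i, k in combinations(range(len(families)), 2)
        for j in range(i + 1, k)
        for S in families[i]
        for T in families[k]
    )

def recursive_sequence(elems):
    """Recursive sequence on the left half, matchings across, then the right half."""
    if len(elems) == 2:
        return [[frozenset(elems)]]
    h = len(elems) // 2
    left, right = elems[:h], elems[h:]
    # h edge-disjoint perfect matchings between the halves (cyclic shifts, an assumption).
    middle = [
        [frozenset({left[i], right[(i + r) % h]}) for i in range(h)]
        for r in range(h)
    ]
    return recursive_sequence(left) + middle + recursive_sequence(right)

for n in (4, 8):
    seq = recursive_sequence(list(range(1, n + 1)))
    print(n, len(seq), satisfies_star(seq))
```

For n=4 the check passes. For n=8 it fails: {1,3} from the second family and a middle set containing 1 intersect in {1}, but the family {{3,4}} lying between them has no set containing 1.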

I discovered this by reading [AHRR] and discovering that they had the bound  , or in this case  . I’ll put their argument on the wiki.

Is it known if 2n is the right bound here?

The best construction I have been able to do is this:

Start by dividing 1….n into n/2 disjoint pairs {1,2}{3,4},…,{i,i+1},…

next insert the following families between {a,b} and {c,d}:

{{a,c},{b,d}} , {{a,d},{b,c}}

This gives a sequence of 1+3(n/2-1) families, and I have not been able to improve on it for (very) small n.

{a,b} and {c,d} should be consecutive pairs in the first sequence.
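Here is a minimal sketch of this construction for even n, with a brute-force test of (*) and of the count 1+3(n/2-1). The function names are invented for the illustration, and the sketch assumes each consecutive pair {c,d} appears as its own single-set family after the two inserted families.

```python
from itertools import combinations

def satisfies_star(families):
    """True if for all i < j < k, S in F_i, T in F_k, some R in F_j contains S & T."""
    return all(
        any((S & T) <= R for R in families[j])
        for i, k in combinations(range(len(families)), 2)
        for j in range(i + 1, k)
        for S in families[i]
        for T in families[k]
    )

def paired_sequence(n):
    """{1,2}, then for consecutive pairs {a,b},{c,d}: {ac,bd}, {ad,bc}, {cd}."""
    pairs = [(i, i + 1) for i in range(1, n, 2)]
    seq = [[frozenset(pairs[0])]]
    for (a, b), (c, d) in zip(pairs, pairs[1:]):
        seq.append([frozenset({a, c}), frozenset({b, d})])
        seq.append([frozenset({a, d}), frozenset({b, c})])
        seq.append([frozenset({c, d})])
    return seq

for n in (4, 6, 8, 10):
    seq = paired_sequence(n)
    print(n, len(seq), 1 + 3 * (n // 2 - 1), satisfies_star(seq))
```

In this sequence each element is active on a contiguous run of families, which is why every one-element intersection is covered by all the families in between.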

Ahh, I see. Indeed broken. Thanks.

That looks convincing (this is a reply to Noam Nisan’s comment which should appear just before this one but may not if WordPress continues to act strangely). It interests me to see how it fits with what I was writing about, so let me briefly comment on that. First of all, let’s think about the sequence of  s. I’ll write what the sequence is in the case  It is 12,1234,34,12345678,56,5678,78. If we condense the pairs to singletons it looks like this: 1, 12, 2, 1234, 3, 34, 4. We can produce this sequence by drawing a binary tree in a natural way and then visiting its vertices from left to right. We label the leaves from 1 to  and we label vertices that appear higher up by the set of all the leaves below them.
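The left-to-right visit is an in-order traversal of a balanced binary tree. A minimal sketch, assuming four leaves for the condensed sequence and the substitution of the pair {2i-1, 2i} for leaf i (the function names are made up for the illustration):

```python
def inorder_labels(lo, hi):
    """Labels of a balanced binary tree on leaves lo..hi, read left to right."""
    if lo == hi:
        return [[lo]]
    mid = (lo + hi) // 2
    # Left subtree, then the vertex itself (all leaves below it), then right subtree.
    return (inorder_labels(lo, mid)
            + [list(range(lo, hi + 1))]
            + inorder_labels(mid + 1, hi))

def expand_pairs(label):
    """Replace each leaf i by the pair {2i-1, 2i}."""
    return [x for i in label for x in (2 * i - 1, 2 * i)]

condensed = inorder_labels(1, 4)
print(["".join(map(str, s)) for s in condensed])
# ['1', '12', '2', '1234', '3', '34', '4']
print(["".join(map(str, expand_pairs(s))) for s in condensed])
# ['12', '1234', '34', '12345678', '56', '5678', '78']
```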

The gain from  to  comes from the fact that these sets appear with a certain multiplicity: the multiplicity of a set  in the sequence is proportional to the size of that set.

By the way, is the following argument correct? Let  be a general system of families satisfying the condition we want. For each  let  be the union of all the sets in  Then if  and  it follows that  (Otherwise just pick a set in  and a set in  that both contain  and their intersection won’t be contained in any set in  ) Therefore, by the observation I made in this comment (a fact that I’m sure others were well aware of long before my comment) there are at most  distinct sets  It follows that if we have a superpolynomial lower bound, then we must have a superpolynomial lower bound with the same  for every family. So an equivalent formulation of the problem would be to find a polynomial upper bound (or superpolynomial lower bound) for the number of  with the additional constraint that the union of every  is the whole of $[n].$

If that is correct, then I think it helps us to think about the problem, because it raises the following question: if the unions of all the set systems are the same, then what is going to give us a linear ordering? In Noam’s example, if you look at the popular  there is no ordering: any triple of families that share a union has the desired relationship. A closely related question is one I’ve asked already: what is the upper bound for the number of families if you ask for  to cover  for every  ?

The case where the support of every family is [n] does indeed seem to be enough, and concentrating on it was also suggested by Yuri ( https://gilkalai.wordpress.com/2010/09/29/polymath-3-polynomial-hirsch-conjecture/#comment-3438 ). This restriction doesn’t seem so convenient for proving upper bounds, since the property disappears under “restrictions”. For example, running the EHRR argument would reduce n by 1 without shortening the sequence at all, but would lose the property.

Ah, thanks for pointing that out. I’m coming late to this discussion and have not been following the earlier discussion posts. For now I myself am trying to think about lower bounds (either to find one or to understand better why the conjecture might be true) so I quite like the support restriction because it somehow stops one from trying to make the “wrong” use of the ground set. It also suggests the following question that directly generalizes what you were doing in your example. Suppose we have three disjoint sets X,Y,Z of size n. How many families  can we find with the following properties?

(i) For every  and every  the intersections  and  all have size 1.

(ii) Each  is a partition of  into sets of size 3.

(iii) For every  and every  and  there exists  such that

(iv) No set belongs to more than one of the

If we do it for X and Y and sets of size 2 then the answer is easily seen to be n (and condition (iii) holds automatically), but for sets of size 3 it doesn’t seem so obvious, since each element of X can be in  triples, but if we exploit that then we have to worry about condition (iii).

I haven’t thought about this question at all so it may have a simple answer. If it does, then I would follow it up by modifying condition (iii) so that it reads “For every  and every  and  there exists  such that  ”

I now see that Yuri’s comment was not from an earlier discussion thread — I just missed it because I was trying to think more about general constructions than constructions for small

Hi everyone,

Here’s another thought on handling the d=2 case (and perhaps the more general case):

Suppose we add the following condition on our collection of set families $F_i$:

(**) Each $(d-1)$-element subset of $[n]$ is active on at most two of the families $F_i$.

Here, I mean that a set $S$ is active on $F_i$ if there is a $d$-set $T \in F_i$ for which $T \supset S$.

This condition must be satisfied for polytopes — working in the dual picture, any codimension 1 face of a simplicial polytope is contained in exactly two facets.

So how does this help us in the case that $d=2$? Suppose $F_1,\ldots,F_t$ is a sequence of disjoint families of 2-sets on ground set $[n]$ that satisfies Gil’s property (*) and the above property (**).

Let’s count the number of pairs $(j,k) \in [n] \times [t]$ for which $j$ is active on $F_k$. Each $j \in [n]$ is active on at most two of the families $F_i$, and hence the number of such pairs is at most $2n$. On the other hand, since each family $F_k$ is nonempty, there are at least two vertices $j \in [n]$ that are active on each family $F_k$. Thus the number of such ordered pairs is at least $2t$.

Thus $2t \leq 2n$, that is, $t \leq n$, giving an upper bound of $f(n,2) \leq n$ when we assume the additional condition (**).

This technique doesn’t seem to generalize to higher dimensions without some sort of analysis of how the sizes of the set families $F_i$ grow as $i$ ranges from $1$ to $t$.

I think I can show f(4) is less than 9. We previously had that it must be less than 10.

Assume we have 9 or more elements; then there are 8 types of sets in terms of which of the first three elements are in the set. We must have a repetition of the same type in sets in two different families.

Then every set must contain an element that contains the 3 elements of the repetition. Now if the repetition is not null there can be at most 8 elements that contain the repetition, but we have 7 families besides A and B and so there is a contradiction.

But we can improve this since we have two instances of the repetition in the first two elements, so we have at most 6 unused elements.

Now we can repeat this argument for each set of four elements. So we have at most 5 families containing the null set and each single element. And adding one element not in a set in a family and having the resulting augmented set outside the family is forbidden. So outside of the 5 sets that contain the singleton elements and the null set there are no two element sets, no single element sets and no null set. But that leaves 5 elements for 4 sets.

This means that each family must contain one of the sets with more than two elements. We divide the proof into two cases.

In the first case one family must contain the set with four elements. Then another family must consist of a single set with three elements. Then since their intersection will have three elements